Transfer Learning Applications Across Industries

Transfer learning—the practice of repurposing a pre‑trained model for a new but related task—has reshaped the AI landscape. By reusing knowledge learned from large datasets, companies can build high‑performance models faster, reduce costs, and overcome data scarcity. This post dives into real‑world applications across industries, technical fundamentals, challenges, and the future of transfer learning.

Healthcare: From Image Diagnostics to Genomics

- Radiology & medical imaging: DenseNet, ResNet, and EfficientNet models pre‑trained on ImageNet are fine‑tuned to detect tumors, fractures, and pneumonia in X‑ray and CT scans. Accuracy improvements of 5–10% over baseline models are commonly reported, though gains vary with the dataset and task.

- Pathology: Whole‑slide image classification benefits from fine‑tuning VGG‑16 and Inception on histopathology datasets, supporting earlier cancer detection.

- Drug discovery & genomics: BERT‑style language models are adapted to biomedical text (BioBERT) and to protein sequences (e.g., ProtBERT), producing embeddings that help accelerate target identification.

Finance: Risk Assessment, Fraud Detection, & Asset Pricing

| Use Case | Model | Outcome |
|---|---|---|
| Fraud detection | BERT fine‑tuned on transaction logs | 15% reduction in false positives |
| Credit scoring | Gradient‑boosted trees pre‑trained on Kaggle competition data | 8% increase in predictive accuracy |
| Market sentiment | RoBERTa on news headlines + fine‑tuning | Faster response times for algorithmic trading |

Key benefits include rapid deployment of models in real‑time systems and improved regulatory compliance.

Manufacturing & Industrial IoT

- Predictive maintenance: CNNs pre‑trained on ImageNet are adapted to classify images of wear and tear on bearings, reducing downtime.

- Quality inspection: Transfer learning with YOLOv5 fine‑tuned on specific defect datasets boosts defect detection rates.

- Robotics: Reinforcement learning policies pre‑trained in simulation transfer to real robots, shortening learning loops.

Open‑source tools like NVIDIA’s TAO Toolkit accelerate such pipelines.

Agriculture: Precision Farming & Crop Monitoring

- Crop disease detection: MobileNetV2 models fine‑tuned on plant pathology images help farmers spot diseases early.

- Yield prediction: Models pre‑trained on satellite imagery datasets (e.g., Sentinel‑2) are fine‑tuned on local crop fields, improving forecast accuracy.

- Drone analytics: Vision‑transformer models pre‑trained on generic aerial datasets adapt to orchard mapping.

These applications enable sustainable farming practices and higher yields.

Automotive & Autonomous Driving

- Object detection: YOLOv5 fine‑tuned on Cityscapes and nuScenes datasets detects pedestrians and road signs with higher precision.

- Lane detection: Pre‑trained ResNet‑50 models adapt to varying lighting and weather conditions, boosting lane‑keeping systems.

- Semantic segmentation: DeepLabv3+ transfer learning enhances road‑scene understanding for autonomous navigation.

Industry players like Waymo and Tesla are commonly cited as relying on such transfer strategies.

Retail & E‑Commerce

- Recommendation engines: Transformers pre‑trained on large text corpora are fine‑tuned on customer reviews, improving personalized suggestions.

- Visual search: Feature extractor networks pre‑trained on ImageNet adapt to product images, leading to faster search results.

- Demand forecasting: LSTM models pre‑trained on commodity sales data transfer to brand‑specific forecasting.
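
The visual‑search idea above can be sketched as a nearest‑neighbour lookup over embeddings. This is a minimal illustration, not a production design: it assumes a pre‑trained feature extractor has already turned each product image into a vector, and the catalog entries, vectors, and function names below are all made up for the example.

```python
import math

# Hypothetical embeddings, as if produced by a pre-trained feature extractor.
# Real embeddings would have hundreds of dimensions; these values are made up.
CATALOG = {
    "red sneaker": [0.9, 0.1, 0.0],
    "blue sneaker": [0.7, 0.1, 0.6],
    "leather bag": [0.0, 0.9, 0.2],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def visual_search(query_embedding, catalog, top_k=2):
    """Return the names of the top_k catalog items most similar to the query."""
    ranked = sorted(
        catalog.items(),
        key=lambda item: cosine_similarity(query_embedding, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]


# Embedding of a shopper's photo (also made up).
query = [1.0, 0.0, 0.1]
results = visual_search(query, CATALOG)
print(results)
```

At catalog scale, the exhaustive sort would normally be replaced by an approximate nearest‑neighbour index, but the ranking principle stays the same.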

Adoption cuts down time‑to‑market for new AI features.

Media & Entertainment

- Content recommendation: Collaborative filtering models initialized with pre‑trained embeddings from large-scale usage data lead to better engagement.

- Video summarization: GPT‑style models fine‑tuned on subtitle and script data produce coherent highlights.

- Speech synthesis: Voice‑conversion models pre‑trained on multi‑speaker corpora adapt to brand‑specific voices.

These tools drive higher user retention.

Energy & Sustainability

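- Smart grid forecasting: LSTM models pre‑trained on broader load data and fine‑tuned on local grid data help utilities predict load demand; classical models such as ARIMA are refit per series rather than pre‑trained, so they are better treated as baselines.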

- Renewable integration: Convolutional models trained on weather satellite images transfer to wind farm performance prediction.

- Anomaly detection: Autoencoders pre‑trained on normal sensor data detect equipment faults, reducing outages.
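
For the autoencoder‑based anomaly detection above, the decision step can be sketched as follows. The sketch assumes a pre‑trained autoencoder has already produced one reconstruction error per sensor reading; the error values, the function names, and the "mean plus three standard deviations" rule are illustrative assumptions, not a recommended setting.

```python
import statistics


def fit_threshold(normal_errors, k=3.0):
    """Set the alarm threshold at mean + k standard deviations of the
    reconstruction errors observed on known-normal data."""
    mean = statistics.fmean(normal_errors)
    std = statistics.pstdev(normal_errors)
    return mean + k * std


def flag_anomalies(errors, threshold):
    """Mark every reading whose reconstruction error exceeds the threshold."""
    return [error > threshold for error in errors]


# Made-up reconstruction errors from a period of normal operation.
NORMAL_ERRORS = [0.10, 0.12, 0.09, 0.11, 0.10, 0.13, 0.08, 0.11]
threshold = fit_threshold(NORMAL_ERRORS)

# Made-up errors for new readings; the middle one reconstructs poorly.
flags = flag_anomalies([0.10, 0.45, 0.12], threshold)
print(threshold, flags)
```

In a transfer setting, the autoencoder would be pre‑trained on data from similar equipment and only the threshold (and possibly the last layers) re‑fit on the local site's normal data.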

Transfer learning supports efficient transition to renewable grids.

Education & Personal Learning

- Adaptive learning platforms: BERT models pre‑trained on large corpora and then fine‑tuned on question‑answer pairs help personalize study pathways.

- Automated grading: Vision models initialized with ImageNet weights adapt to handwritten exam grading, increasing throughput.

- Content recommendation: Collaborative filtering with pre‑trained user embeddings matches learners with relevant courses.

These innovations scale education access worldwide.

Technical Foundations

Pre‑training Datasets

- ImageNet for vision tasks.

- Wikipedia + books for language tasks.

- COCO, Pascal VOC for object detection.

- Large‑scale sensor datasets for time‑series tasks.

Common Strategies

| Strategy | Description |
|---|---|
| Feature extraction | Freeze the pre‑trained layers and train only a new task‑specific head (e.g., the final fully connected layers). |
| Fine‑tuning | Continue training the pre‑trained encoder (all or most layers) with a small learning rate. |
| Domain adaptation | Use adversarial losses to align source and target feature distributions. |
| Multi‑task learning | Jointly train on related tasks to share representations. |
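
The difference between the first two strategies can be sketched in plain Python. This is a toy under stated assumptions: the "pre‑trained backbone" is a made‑up two‑unit linear layer with ReLU, the target dataset is invented, and the `train` function and its learning rates are hypothetical names and values chosen for illustration. A real project would load a pre‑trained network from a deep learning framework instead.

```python
import copy
import math

# Hypothetical stand-in for a pre-trained backbone: one linear layer + ReLU.
# The weights are made up; real ones would come from large-scale pre-training.
PRETRAINED_BACKBONE = [[1.0, -1.0], [-1.0, 1.0]]

# Tiny made-up target dataset: label 1 when the first input dominates.
TARGET_DATA = [
    ([2.0, 0.2], 1), ([0.2, 2.0], 0),
    ([1.5, 0.1], 1), ([0.1, 1.5], 0),
    ([1.8, 0.3], 1), ([0.3, 1.8], 0),
]


def features(x, backbone):
    """Backbone forward pass: linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in backbone]


def predict(x, backbone, head, bias):
    """Probability of class 1 from a logistic head on top of the backbone."""
    z = sum(h * f for h, f in zip(head, features(x, backbone))) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(data, backbone, head_lr=0.1, backbone_lr=0.0, epochs=100):
    """Train a new logistic head; backbone_lr=0.0 keeps the backbone frozen."""
    backbone = copy.deepcopy(backbone)  # never mutate the pre-trained weights
    head, bias = [0.0] * len(backbone), 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = features(x, backbone)
            err = predict(x, backbone, head, bias) - y  # dLoss/dLogit (log loss)
            if backbone_lr > 0.0:
                # Backpropagate through the head and the ReLU into the backbone.
                for j, row in enumerate(backbone):
                    if feats[j] > 0.0:
                        for i in range(len(row)):
                            row[i] -= backbone_lr * err * head[j] * x[i]
            head = [h - head_lr * err * f for h, f in zip(head, feats)]
            bias -= head_lr * err
    return backbone, head, bias


def accuracy(data, backbone, head, bias):
    """Fraction of examples classified correctly at a 0.5 threshold."""
    hits = sum((predict(x, backbone, head, bias) > 0.5) == bool(y) for x, y in data)
    return hits / len(data)


# Feature extraction: frozen backbone, only the new head is trained.
frozen_bb, frozen_head, frozen_bias = train(TARGET_DATA, PRETRAINED_BACKBONE)

# Fine-tuning: the backbone is also updated, with a 10x smaller learning rate.
tuned_bb, tuned_head, tuned_bias = train(
    TARGET_DATA, PRETRAINED_BACKBONE, backbone_lr=0.01
)

print("frozen-backbone accuracy:", accuracy(TARGET_DATA, frozen_bb, frozen_head, frozen_bias))
print("backbone changed by fine-tuning:", tuned_bb != PRETRAINED_BACKBONE)
```

The smaller backbone learning rate is the key design choice: it lets the pre‑trained features shift toward the target task without being overwritten by a few noisy gradient steps.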

Architectures

- CNNs (ResNet, EfficientNet) for images.

- Transformers (BERT and the GPT family for text, ViT for vision).

- Graph neural nets for relational data.

- Recurrent nets (LSTM, GRU) for sequential data.

Challenges & Mitigation

- Negative transfer – When source knowledge harms the target. Mitigation: use domain‑adversarial training or selective layer freezing.

- Data heterogeneity – Varying data quality across industries. Mitigation: robust pre‑processing and synthetic data augmentation.

- Privacy & security – Sensitive industry data limits model sharing. Mitigation: federated transfer learning and differential privacy.

- Computational cost – Large pre‑trained models require GPU resources. Mitigation: knowledge distillation and pruning.
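
Knowledge distillation, mentioned above as a mitigation for computational cost, trains a small "student" model to match the softened output distribution of a large "teacher". A minimal sketch of the commonly used soft‑target loss follows; the function names, the temperature of 2.0, and the example logits are assumptions for illustration. In practice this term is usually combined with the ordinary cross‑entropy on the true labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer outputs."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2 so the
    gradient magnitude stays comparable across temperatures."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0
    )
    return temperature ** 2 * kl


# Made-up logits for a single three-class example.
teacher_logits = [4.0, 1.0, 0.5]
student_logits = [2.5, 1.2, 0.8]

loss = distillation_loss(student_logits, teacher_logits)
print("distillation loss:", loss)
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge.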

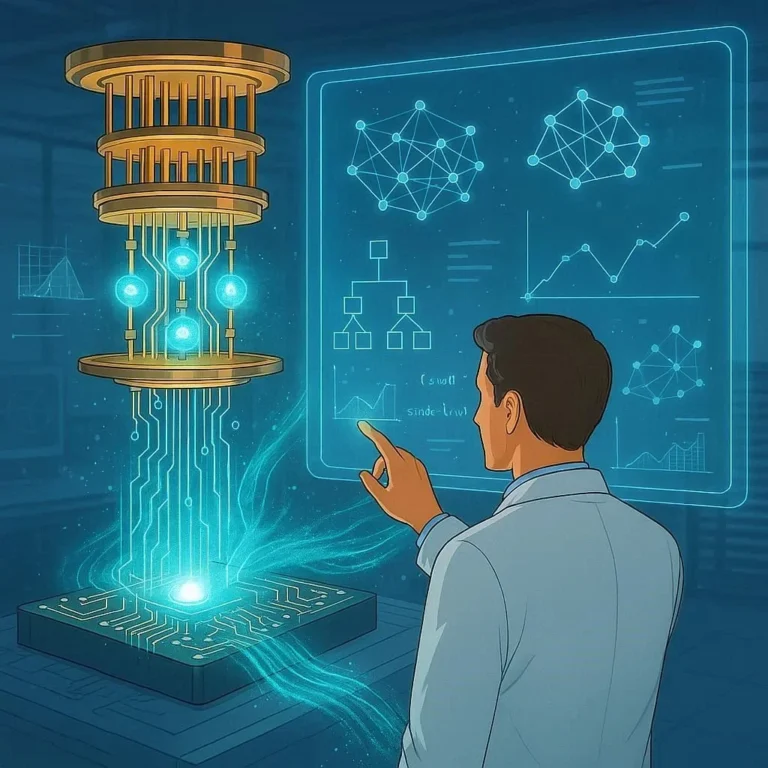

Future Trends

- Meta‑learning: Models learn how to learn quickly from few examples.

- Continual learning: Seamlessly incorporate new data streams without forgetting.

- Automated architecture search: Generate domain‑specific architectures automatically.

- Cross‑modal transfer: Transfer knowledge from vision to language and vice‑versa.

- AI‑for‑AI: Using AI models to optimise transfer learning pipelines.

These directions promise even faster, more efficient domain adaptation.

Conclusion

Transfer learning is not a niche technique—it is becoming the backbone of AI deployment across every sector. From early disease detection in healthcare to real‑time fraud prevention in finance, the ability to harness pre‑trained knowledge saves time, cuts costs, and opens new possibilities for innovation.

Take Action: Evaluate your industry’s data challenges. Identify a low‑cost, high‑impact use case for transfer learning and start prototyping today. Whether you’re a data scientist, product manager, or C‑suite executive, embracing transfer learning can accelerate your organization’s AI roadmap and cement a competitive edge.

Ready to experiment? Contact our AI specialists to discover the best pre‑trained models and fine‑tuning strategies for your industry.